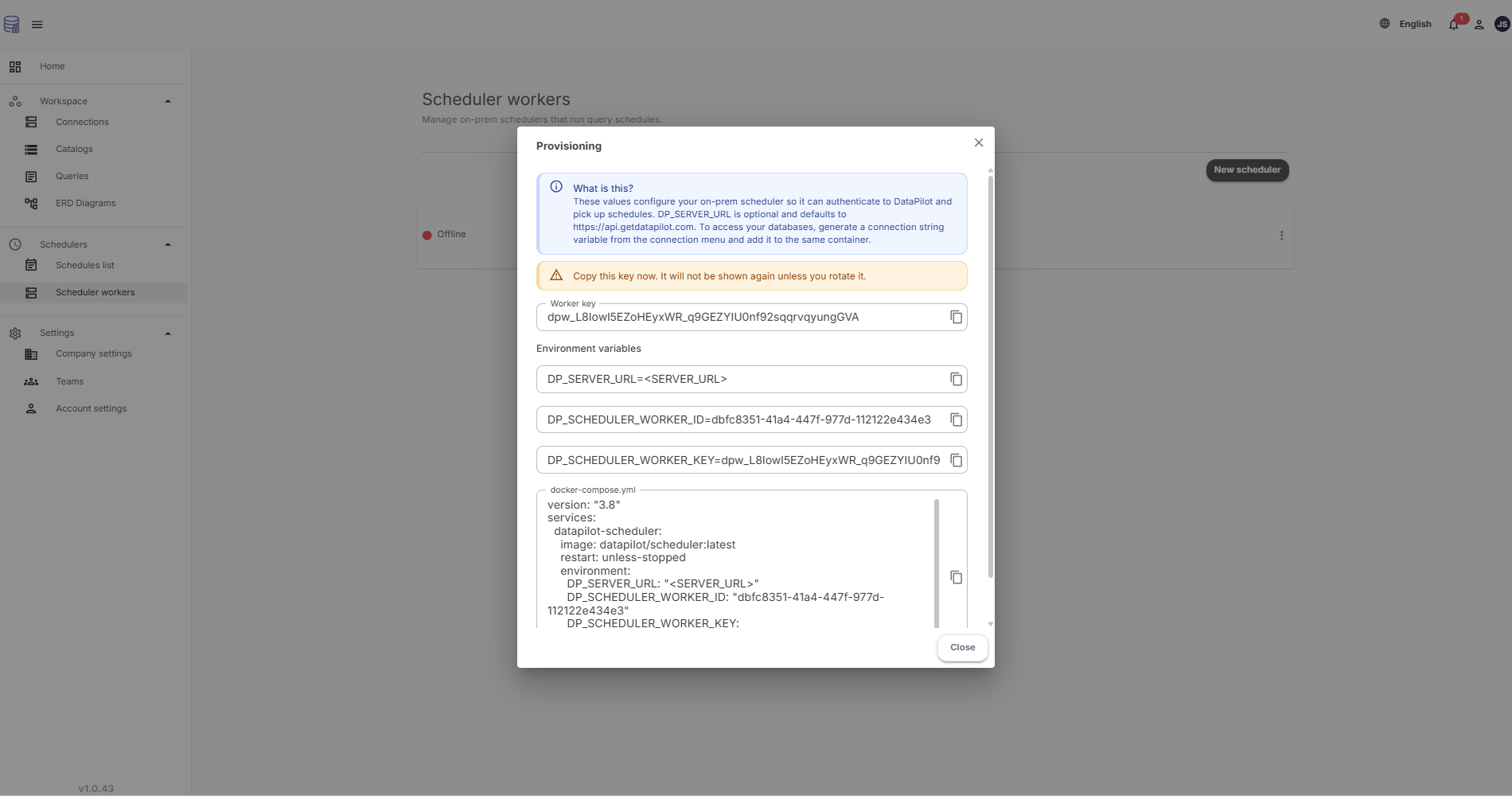

1. Provision a worker

In Schedules → Workers, create a worker and copy DP_SCHEDULER_WORKER_ID and DP_SCHEDULER_WORKER_KEY.

Tip: treat the key like a password and rotate it when needed.

Deploy a worker in your network and connect it to your saved connections via DP_CONN_* secrets. The team's workflow stays the same: schedule → run → notify → download CSV/XLSX exports in the DataPilot workspace.

Supported databases: PostgreSQL, SQL Server, MySQL, Amazon Redshift. More databases are coming.

For each saved connection, copy the generated DP_CONN_* variable name and set it in the same environment where the worker runs.

Keep secrets on the host (or secret store), never in source control.
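One way to back up the "never in source control" rule is a small check that scans a file's text for literal values assigned to the worker key or to any DP_CONN_* variable. The sketch below is an illustration only and is not part of DataPilot: the function name `find_hardcoded_secrets` and the regular expression are assumptions, and the pattern covers only simple `NAME=value` and `NAME: value` forms.

```python
import re
from typing import List

# Matches NAME=value or NAME: value for the worker key and any DP_CONN_*
# variable. The value must be a non-empty literal on the same line.
SECRET_ASSIGNMENT = re.compile(
    r"""\b(DP_SCHEDULER_WORKER_KEY|DP_CONN_[A-Za-z0-9_]+)\s*[=:]\s*["']?([^\s"'#]+)"""
)


def find_hardcoded_secrets(text: str) -> List[str]:
    """Return the secret variable names that appear with a literal value."""
    found = []
    for line in text.splitlines():
        match = SECRET_ASSIGNMENT.search(line)
        # Values such as ${VAR} reference the environment and are not literals.
        if match and not match.group(2).startswith("$"):
            found.append(match.group(1))
    return found
```

Run against the contents of a config or compose file before committing, a non-empty result suggests that a secret value has been written into the file rather than left on the host or in a secret store.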

Start the container/service, let it pick up scheduled runs, and follow the workspace notifications to download exports as soon as they’re ready.

Same UI loop for everyone: schedules, runs, and notifications.

Each on-prem worker authenticates using two environment variables: DP_SCHEDULER_WORKER_ID (stable identifier) and DP_SCHEDULER_WORKER_KEY (rotatable secret). This lets your deployment keep a consistent worker identity while you rotate access safely.
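To make the split between a stable ID and a rotatable key concrete, here is a minimal preflight sketch that reads both variables from the environment and fails fast if either is missing. It does not show how the DataPilot worker itself loads credentials; `WorkerCredentials` and `load_worker_credentials` are hypothetical names used for illustration.

```python
import os
from dataclasses import dataclass, field
from typing import Mapping


@dataclass(frozen=True)
class WorkerCredentials:
    worker_id: str
    # repr=False keeps the key out of logs and tracebacks.
    worker_key: str = field(repr=False)


def load_worker_credentials(env: Mapping[str, str] = os.environ) -> WorkerCredentials:
    """Read both worker variables, raising if either is missing or empty."""
    required = ("DP_SCHEDULER_WORKER_ID", "DP_SCHEDULER_WORKER_KEY")
    missing = [name for name in required if not env.get(name, "").strip()]
    if missing:
        raise RuntimeError("Missing worker variables: " + ", ".join(missing))
    return WorkerCredentials(
        worker_id=env["DP_SCHEDULER_WORKER_ID"].strip(),
        worker_key=env["DP_SCHEDULER_WORKER_KEY"].strip(),
    )
```

Because only the key changes on rotation, a deployment built this way would need to update a single variable and restart, while the worker ID stays the same.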

Screenshot: Scheduler workers list, showing the worker ID, the rotate key action, and last seen/health.

Connections stay defined in the workspace, but the worker needs the matching secret at runtime. DataPilot shows a generated DP_CONN_* variable name per connection so your on-prem worker can run the same schedules near your databases.
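Before starting the worker, it can help to confirm that every DP_CONN_* name copied from the workspace is actually set on the host. The sketch below assumes you keep your own list of the names you copied. The helper `missing_connection_vars` and example names such as `DP_CONN_SALES_PG` are hypothetical; the real names are whatever DataPilot generates per connection.

```python
import os
from typing import Iterable, List, Mapping

CONN_PREFIX = "DP_CONN_"


def missing_connection_vars(
    expected: Iterable[str], env: Mapping[str, str] = os.environ
) -> List[str]:
    """Return the expected DP_CONN_* names that are unset or empty, sorted."""
    problems = []
    for name in expected:
        if not name.startswith(CONN_PREFIX):
            raise ValueError(f"{name!r} does not start with {CONN_PREFIX}")
        if not env.get(name, "").strip():
            problems.append(name)
    return sorted(problems)
```

For example, calling `missing_connection_vars(["DP_CONN_SALES_PG"])` on the worker host returns an empty list when that variable is set and a one-item list when it is not.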

Screenshot: Connections list with DP_CONN_* names, showing the DP_CONN_* label and a copy action next to each connection.

You can monitor worker activity and run history inside the workspace. When a run completes, exports are delivered through the same notification loop your team already uses, so people always know where to find the latest CSV/XLSX file.